Machine Learning Features: Meaning

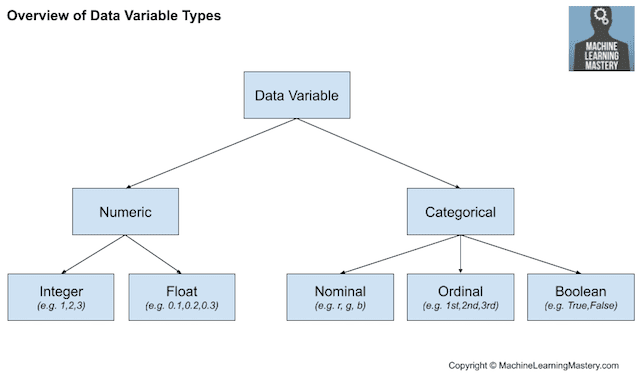

A feature map is a function that maps a data vector to a feature space. A feature is an input variable: the x variable in simple linear regression.
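As a minimal sketch of the feature-map idea, the hypothetical helper `poly_feature_map` below (not taken from any library) maps a two-dimensional data vector (x1, x2) into a degree-2 polynomial feature space. A model that is linear in the mapped features can then express a boundary that is curved in the original inputs.

```python
def poly_feature_map(x):
    """Hypothetical degree-2 feature map: (x1, x2) -> (x1, x2, x1^2, x1*x2, x2^2)."""
    x1, x2 = x
    return (x1, x2, x1 * x1, x1 * x2, x2 * x2)


def linear_score(weights, features):
    """A linear model in feature space: the dot product of weights and features."""
    return sum(w * f for w, f in zip(weights, features))


phi = poly_feature_map((3.0, 4.0))  # (3.0, 4.0, 9.0, 12.0, 16.0)

# Weights (0, 0, 1, 0, 1) compute x1^2 + x2^2: linear in feature space,
# but a circular (nonlinear) boundary in the original (x1, x2) space.
radius_sq = linear_score((0, 0, 1, 0, 1), phi)  # 25.0
```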


The concept of a feature is related to that of an explanatory variable used in statistical techniques such as linear regression.

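Features are often denoted x1, x2, and so on. In a spam detector, for example, the features could be the words in the email text. Machine learning can analyze the data entered into a system it oversees and instantly decide how it should be categorized before sending it to storage servers.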
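To make "words in the email text" concrete, here is a minimal bag-of-words sketch in plain Python. The example emails and the helper names (`tokenize`, `build_vocabulary`, `to_feature_vector`) are hypothetical; each position x1, x2, ... of the resulting vector is the count of one vocabulary word in the email.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def build_vocabulary(emails):
    """Collect every distinct word in the corpus, sorted for a stable column order."""
    return sorted({word for email in emails for word in tokenize(email)})


def to_feature_vector(email, vocabulary):
    """Return [x1, x2, ...]: the count of each vocabulary word in this email."""
    counts = Counter(tokenize(email))
    return [counts[word] for word in vocabulary]


emails = [
    "Win a FREE prize now",
    "Meeting moved to Monday",
    "Free free free offer",
]
vocab = build_vocabulary(emails)
vectors = [to_feature_vector(e, vocab) for e in emails]
```

A real spam filter would usually also handle unseen words, stop words, and weighting (for example TF-IDF), but the core idea is the same: text becomes a fixed-length numeric feature vector.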

In our dataset, age had 55 unique values, and this caused the algorithm to treat it as the most important feature. ML has been one of the fundamental fields of AI research since its inception.

Machine learning-enabled programs are able to learn, grow, and change by themselves when exposed to new data. Apart from choosing the right model for our data, we need to choose the right data to put into our model. With the recent rise in popularity of quantum computing algorithms and the public availability of first-generation quantum hardware, it is of interest to assess their potential for efficiently handling …

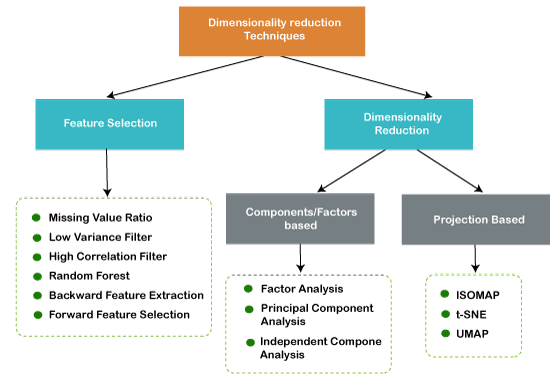

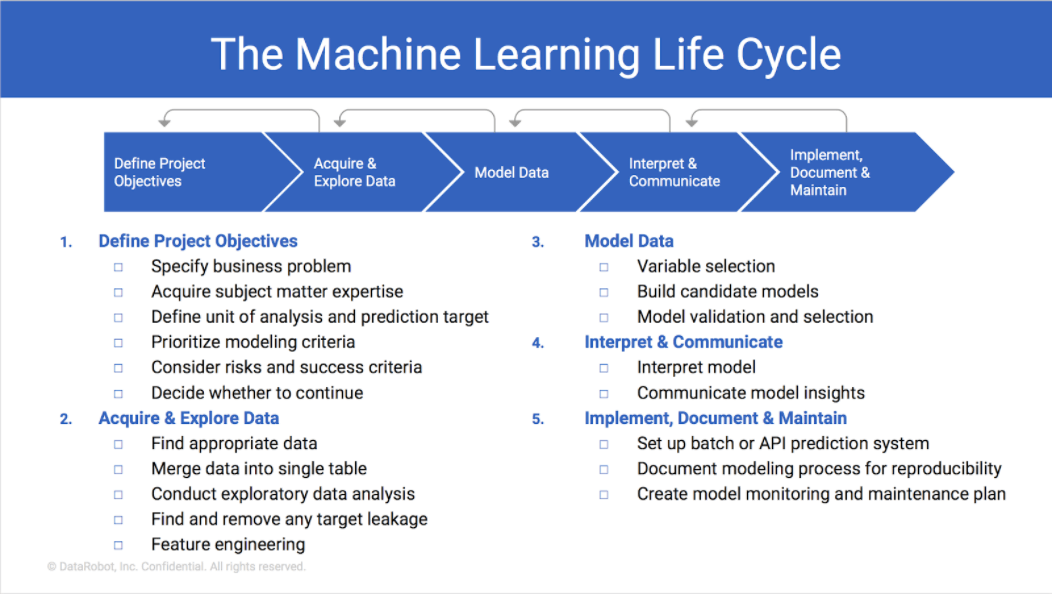

Consider a table that contains information on old cars. Organizations can use Machine Learning Operations (MLOps) to tackle challenges and problems specific to the various stages of the machine learning development process. When approaching almost any unsupervised learning problem (any problem where we want to cluster or segment our data points), feature scaling is a fundamental step to ensure we get the expected results.
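A minimal sketch of why scaling matters for distance-based clustering, assuming a small hypothetical table of old cars with two features: age in years and mileage in kilometres. In raw units the Euclidean distance that K-means relies on is dominated by mileage; after z-score standardization (each column rescaled to mean 0 and standard deviation 1), both features contribute on a comparable scale.

```python
import math
import statistics

# Hypothetical old-car table: (age in years, mileage in km).
cars = [
    (3, 40_000),
    (15, 42_000),
    (4, 150_000),
]


def euclidean(a, b):
    """Straight-line distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def standardize(rows):
    """Z-score each column: subtract its mean, divide by its population std dev.

    Assumes no column is constant (a zero std dev would divide by zero).
    """
    columns = list(zip(*rows))
    means = [statistics.fmean(c) for c in columns]
    stds = [statistics.pstdev(c) for c in columns]
    return [tuple((v - m) / s for v, m, s in zip(row, means, stds)) for row in rows]


scaled = standardize(cars)

# Raw units: mileage dominates, so car 0 looks far closer to car 1
# (12 years, 2,000 km apart) than to car 2 (1 year, 110,000 km apart).
raw_01 = euclidean(cars[0], cars[1])  # about 2,000
raw_02 = euclidean(cars[0], cars[2])  # about 110,000

# Standardized units: both gaps are measured in standard deviations,
# and car 2 becomes the (slightly) nearer neighbour of car 0.
scaled_01 = euclidean(scaled[0], scaled[1])  # about 2.21
scaled_02 = euclidean(scaled[0], scaled[2])  # about 2.15
```

Which neighbour "should" be nearer is a modeling decision; the point is that without scaling, the unit of measurement makes that decision for you.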

Feature engineering is a machine learning technique that uses domain knowledge of the data to create new variables (features) that aren't in the training set and that help machine learning algorithms work better.
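Continuing with a table of old cars, the sketch below derives two features that are not raw columns: the car's age and its average kilometres per year. The `Car` fields, the `engineer_features` helper, and the choice of reference year are all hypothetical, picked only to illustrate creating new variables from existing ones.

```python
from dataclasses import dataclass


@dataclass
class Car:
    """Hypothetical raw row from an old-car table."""
    year_made: int
    mileage_km: float
    price: float  # the target to predict, so it is not turned into a feature


def engineer_features(car, reference_year):
    """Derive features that are not raw columns of the table."""
    age_years = reference_year - car.year_made
    # Average usage intensity; a car from the reference year is treated as
    # one year old so we never divide by zero.
    km_per_year = car.mileage_km / max(age_years, 1)
    return {"age_years": age_years, "km_per_year": km_per_year}


features = engineer_features(Car(2010, 140_000, 4_500), reference_year=2024)
# {'age_years': 14, 'km_per_year': 10000.0}
```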

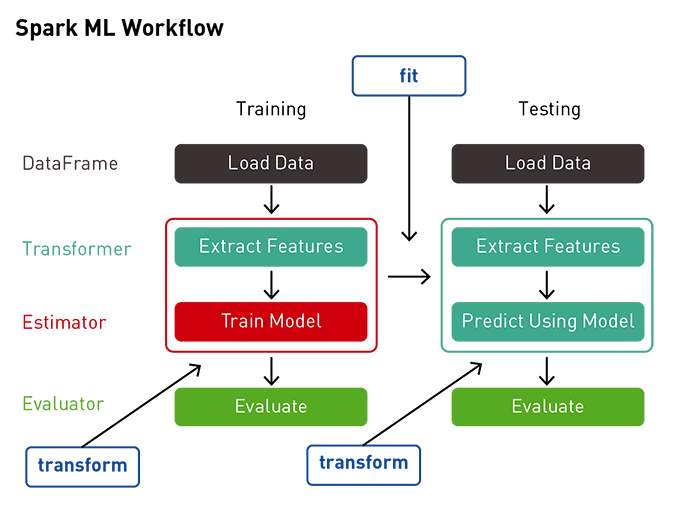

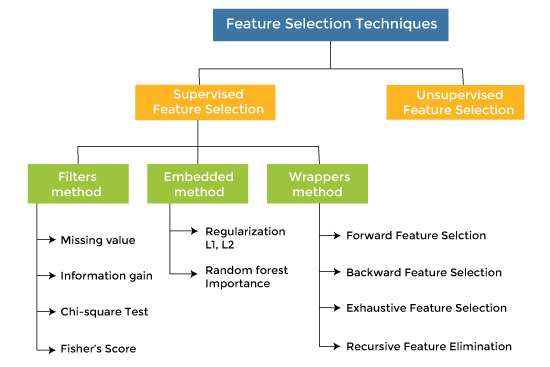

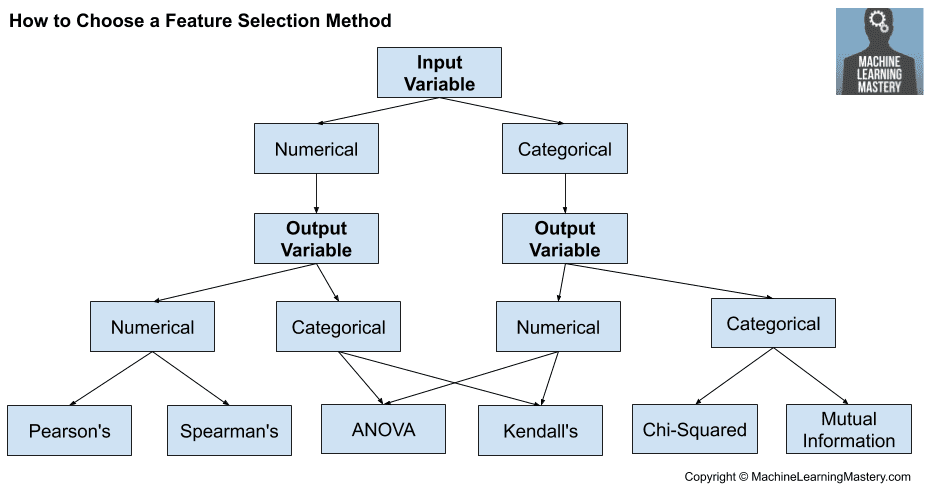

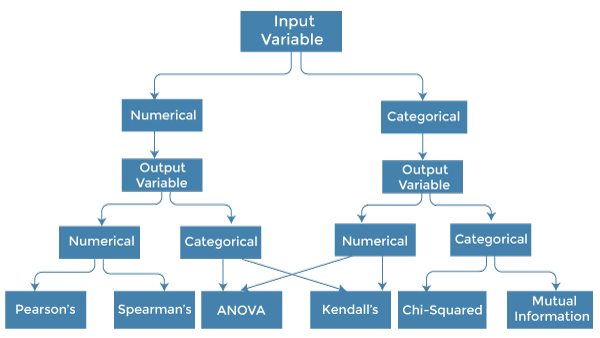

Feature selection is the automatic selection of attributes in your data (such as columns in tabular data) that are most relevant to the predictive modeling problem you are working on. The main logic is to present your learning algorithm with data that it can more easily regress or classify. Feature importances form a critical part of machine learning interpretation and explainability.
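One simple family of feature selection methods is the univariate filter: score each column against the target independently and keep the top k. The sketch below scores columns by absolute Pearson correlation; the data and the `select_k_best` helper are hypothetical, and this is only one of several approaches (wrapper and embedded methods are others). Note that a filter like this ignores redundancy between the selected features.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no information about the target
    return cov / (sx * sy)


def select_k_best(columns, target, k):
    """Keep the k columns whose absolute correlation with the target is largest."""
    scores = {name: abs(pearson(values, target)) for name, values in columns.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores


columns = {
    "age_years": [2, 5, 8, 11, 14],
    "mileage_km": [30_000, 70_000, 90_000, 150_000, 160_000],
    "num_doors": [4, 4, 4, 4, 4],
    "colour_code": [3, 1, 4, 1, 5],
}
price = [15_000, 11_000, 8_500, 5_000, 4_000]
selected, scores = select_k_best(columns, price, k=2)
```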

Forgetting to apply feature scaling before a distance-based model such as K-means or DBSCAN can be fatal and can completely bias the results. Feature selection is also called variable selection or attribute selection. Machine learning is an important component of the growing field of data science.

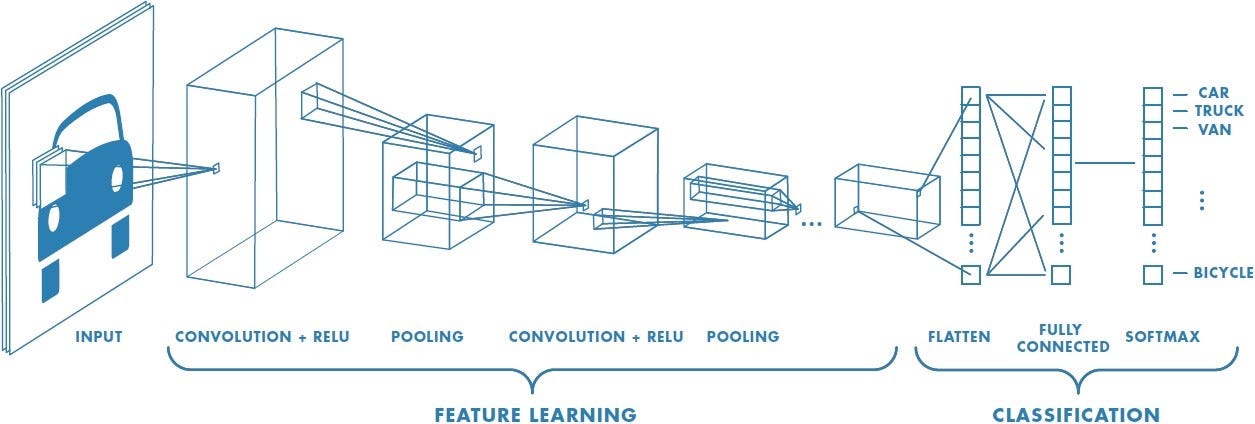

A feature is a measurable property of the object you're trying to analyze. Feature learning is a set of techniques that learn features automatically from raw data. In machine learning, new features can be easily obtained from old features.

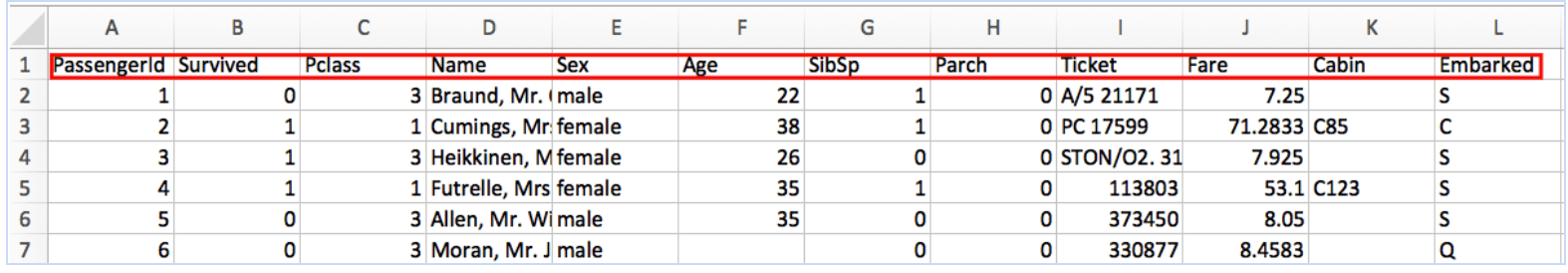

What must be learned in any specific machine learning problem is a set of these features (independent variables), the coefficients of these features, and the parameters for coming up with appropriate functions or models (also termed as …). Feature engineering is the process of selecting and transforming variables when creating a predictive model using machine learning. In datasets, features appear as columns.
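The sketch below makes "features appear as columns" and "coefficients of these features" concrete: a hypothetical car table where each row is a sample and each column a feature, followed by an ordinary least squares fit of simple linear regression that learns one coefficient (slope) for the `age_years` feature. The table and helper names are illustrative assumptions.

```python
# Hypothetical table: each row is one car (a sample), each column a feature,
# and "price" is the target we want to predict.
header = ["age_years", "mileage_km", "price"]
rows = [
    [2, 30_000, 15_000],
    [5, 70_000, 11_000],
    [8, 90_000, 8_500],
    [11, 150_000, 5_000],
    [14, 160_000, 4_000],
]


def column(name):
    """A feature is a column: pull out every row's value for that name."""
    idx = header.index(name)
    return [row[idx] for row in rows]


def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = intercept + slope * x (one feature)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return my - slope * mx, slope


intercept, slope = fit_simple_linear_regression(column("age_years"), column("price"))
# slope is about -933.3 (price drop per year of age), intercept about 16,166.7
```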

Machine learning (ML) is a subset of AI that studies algorithms and models that let machines perform certain tasks without explicit instructions and improve their performance through experience. Feature engineering in machine learning aims to improve the performance of models. With the help of this technology, computers can find valuable information without …

Features are usually numeric, but structural features such as strings and graphs are used in syntactic pattern recognition. Choosing informative, discriminating, and independent features is a crucial element of effective algorithms in pattern recognition, classification, and regression. Feature engineering is a good way to enhance predictive models, as it involves isolating key information, highlighting patterns, and bringing in someone with domain expertise.
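One quick, partial check of whether features are independent is to look for pairs of columns that are almost perfectly correlated, since such pairs carry largely redundant information. The sketch below flags pairs whose absolute Pearson correlation exceeds a threshold; the data (mileage recorded in both kilometres and miles), the 0.99 threshold, and the `redundant_pairs` helper are hypothetical. Correlation only detects linear dependence, so this is a screening step rather than a proof of independence.

```python
import itertools
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def redundant_pairs(columns, threshold):
    """Return (name_a, name_b, r) for feature pairs with |r| above threshold."""
    pairs = []
    for (a, xa), (b, xb) in itertools.combinations(columns.items(), 2):
        r = pearson(xa, xb)
        if abs(r) > threshold:
            pairs.append((a, b, round(r, 3)))
    return pairs


columns = {
    "mileage_km": [30_000, 70_000, 90_000, 150_000, 160_000],
    "mileage_miles": [18_641, 43_496, 55_923, 93_206, 99_419],  # same info, other unit
    "age_years": [2, 5, 8, 11, 14],
    "colour_code": [3, 1, 4, 1, 5],
}
pairs = redundant_pairs(columns, threshold=0.99)
# Only the km/miles pair is flagged; age and mileage are correlated (about 0.98)
# but fall below this particular threshold.
```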

What is a feature variable in machine learning? We can build best practices into model creation, testing, and development frameworks because of our experience and knowledge.

Put simply, machine learning is a subset of AI (artificial intelligence) that enables machines to learn by themselves without being explicitly programmed. In the spam detector example, the features could include the words in the email text. Feature engineering can produce new features for both supervised and unsupervised learning, with the goal of simplifying and speeding up data transformations while also enhancing model accuracy.

Feature learning is the transformation of raw input data into a representation that can be effectively exploited in machine learning tasks. Feature engineering is the pre-processing step of machine learning that transforms raw data into features that can be used to build a predictive model with machine learning or statistical modeling.

The answer is feature selection. In machine learning, feature learning is also called representation learning. The data used to create a predictive model consists of an …

Feature selection is the process of selecting a subset of relevant features for use in a model. If feature engineering is done correctly, it increases the … A simple machine learning project might use a single feature, while a more sophisticated one could use millions of features, specified as …

Driven by the development of machine learning (ML) and deep learning techniques, prognostics and health management (PHM) has become a key aspect of reliability engineering research. Features are nothing but the independent variables in machine learning models. Through the use of statistical methods, algorithms are trained to make classifications or predictions, uncovering key insights within data mining projects.

What are features in machine learning? The model decides which cars must be … In machine learning and pattern recognition, a feature is an individual measurable property or characteristic of a phenomenon.

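Prediction models use features to make predictions. In machine learning, features are the individual independent variables that serve as input to your system. The feature importance method of random forests (impurity-based importance) favors features with high cardinality, that is, many unique values, which is why a feature such as age with 55 unique values can be ranked as the most important feature.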
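The high-cardinality bias can be illustrated without a full random forest. The sketch below uses the multiway information gain of ID3-style decision trees, a simplified stand-in and not the binary-split impurity computation random forests actually use. A meaningless row-ID feature with one unique value per sample gets a perfect gain on the training data, beating a genuinely informative two-valued feature. The data and helper names are hypothetical. Permutation importance computed on held-out data is one commonly used alternative that is less affected by this bias.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(feature, labels):
    """Entropy reduction from a multiway split on every distinct feature value."""
    groups = defaultdict(list)
    for value, label in zip(feature, labels):
        groups[value].append(label)
    n = len(labels)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


# Hypothetical data: 8 samples with a balanced binary target.
labels = [1, 1, 1, 0, 0, 0, 1, 0]
informative = ["a", "a", "a", "b", "b", "b", "b", "a"]  # 2 values, mostly predictive
row_id = list(range(8))  # 8 unique values, pure noise for new samples

gain_informative = information_gain(informative, labels)  # about 0.189
gain_row_id = information_gain(row_id, labels)  # 1.0: every group is pure
```

The row ID "explains" the training labels perfectly only because each value isolates a single sample; it says nothing about new data, which is exactly the kind of feature an impurity-based importance score can overrate.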

